Adam.levine And - Exploring Foundational Ideas

Sometimes, when we look at big, broad concepts, it can feel like trying to understand something completely new. We might be thinking about how things change over time, or how older ideas connect with what's happening now. It's interesting how some foundational pieces of knowledge, like those linked with "adam.levine and" as a sort of conceptual anchor, become so much a part of everyday conversation that nobody needs to explain them in detail anymore.

Yet plenty of deeper questions pop up when you start to pull on those threads. We see this a lot when we try to make sense of ideas that have been around for a while, especially when we consider how they influence newer ways of doing things. A very basic idea that seems simple now can still shape the bigger picture, which is pretty neat.

This exploration covers a few different areas, from how computer systems learn to some very old stories that have shaped how people think for ages. We'll touch on how certain methods for making computers better at learning came about, and then we'll peek into ancient writings about beginnings and choices. It's a bit of a mixed bag, but it all ties back to the core concepts that help us figure things out.

Table of Contents

- What is the Adam Optimization Method, and what does it have to do with adam.levine?

- How does adam.levine connect with foundational ideas?

- What about the adam.levine approach to Training Challenges?

- What is the adam.levine perspective on Saddle Points and Minimal Choices?

- adam.levine and the Roots of Ancient Stories?

- Was it adam.levine's Rib - A Biblical Inquiry?

- What's the difference between BP and adam.levine's methods?

- adam.levine's Influence on L2 Regularization?

What is the Adam Optimization Method, and what does it have to do with adam.levine?

There's a method for making computer programs learn better, especially programs loosely modeled on the brain (neural networks), called Adam. It is one of the most popular ways to train these programs and shows up almost everywhere in artificial intelligence, particularly when people train deep learning models. It helps these models learn from large amounts of data and become more effective at their tasks.

Adam was proposed by D. P. Kingma and J. Ba in 2014. It combines two ideas that already existed. The first is momentum, which keeps the learning process moving in a consistent direction, much as a rolling ball keeps going, by averaging recent gradients instead of reacting to each one alone. The second is adaptive learning rates, where the program adjusts the step size for each parameter as it goes rather than sticking to one fixed speed, which turns out to be very useful.

So Adam takes the best parts of these two approaches and combines them, which often helps models learn more smoothly and more quickly. It has become such a basic part of how we train these systems that its core idea is now widely known, and it is a standard tool in the toolbox for anyone working on these kinds of projects.
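The combination of momentum and adaptive learning rates can be sketched in a few lines. This is a minimal, dependency-free version of the update rule described in Kingma and Ba's paper, assuming the caller supplies a gradient function. The names `adam_minimize` and `quad_grad` and the toy objective are illustrative only; `beta1=0.9`, `beta2=0.999` and `eps=1e-8` follow the paper's suggested defaults, while `lr=0.05` is an assumption picked for this small problem.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Minimize a function with the Adam update rule, given its gradient.

    grad_fn takes a list of floats and returns the gradient as a list of floats.
    """
    x = list(x0)
    m = [0.0] * len(x)  # first moment: running average of gradients (momentum)
    v = [0.0] * len(x)  # second moment: running average of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction: m and v start at zero, so early estimates are too small.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Per-parameter step: the momentum direction scaled by an adaptive rate.
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def quad_grad(p):
    # Gradient of the toy objective f(x, y) = (x - 3)**2 + 10 * (y + 1)**2
    x, y = p
    return [2 * (x - 3), 20 * (y + 1)]


result = adam_minimize(quad_grad, [0.0, 0.0])
print(result)  # should end up close to the minimum at (3, -1)
```

Because each coordinate gets its own running averages, the steeper `y` direction does not force a smaller step size on the flatter `x` direction, which is the practical payoff of the adaptive part.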

How does adam.levine connect with foundational ideas?

The Adam algorithm has gone from a fresh concept to something many people simply assume you know if you work on training machine learning models. It is a foundational piece, like the base layer of a building on which more complex things are built, and it is almost a given in conversations about how modern learning systems operate.

Because the method is so widely used, it shows up in all sorts of projects and experiments. That kind of broad acceptance usually points to a method that works well in practice and has proven its worth over time. It isn't a passing trend; it has stuck around and become a staple for many people in the field.

What about the adam.levine approach to Training Challenges?

Over years of experiments training neural networks, people often noticed something interesting about Adam. The training loss, a measure of how much error the model makes on the data it is learning from, usually went down faster with Adam than with stochastic gradient descent (SGD). The pattern was clear: Adam tended to improve more quickly in the early phase of training.

However, there was often a catch. Even though the training loss dropped more quickly with Adam, the test accuracy, which measures how well the model does on new data it never saw during training, was sometimes worse than with SGD. It was a puzzle why a method that seemed to learn faster would not always generalize as well, and this observation has led to a lot of research and discussion among people who work with these systems.

What is the adam.levine perspective on Saddle Points and Minimal Choices?

One explanation people have looked at involves how training handles tricky spots in the error landscape called saddle points. A saddle point is like a mountain pass: it looks like a low point if you move in one direction but a high point if you move in another. Because the gradient is close to zero there, a plain optimizer can slow to a crawl nearby. Adam appears to escape saddle points faster than SGD in many settings, which helps training keep moving instead of getting stuck.
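A toy experiment shows why the adaptive step helps near a saddle. The sketch below assumes the textbook saddle f(x, y) = x² − y² and starts almost exactly on it, with a tiny offset along the escape direction. Plain gradient descent takes steps proportional to the tiny gradient, while Adam divides by a running estimate of the gradient's size, so its steps stay close to `lr` from the start. The function names are hypothetical, and a single two-dimensional example illustrates the mechanism rather than proving how either method behaves on real networks.

```python
import math


def saddle_grad(x, y):
    # f(x, y) = x**2 - y**2 has a saddle point at (0, 0):
    # a minimum along x and a maximum along y.
    return [2 * x, -2 * y]


def gd_escape_steps(x, y, lr=0.01, max_steps=10_000):
    """Plain gradient descent: count steps until |y| > 1 (escaped the saddle)."""
    for step in range(1, max_steps + 1):
        gx, gy = saddle_grad(x, y)
        x, y = x - lr * gx, y - lr * gy
        if abs(y) > 1.0:
            return step
    return max_steps


def adam_escape_steps(x, y, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8, max_steps=10_000):
    """Adam: count steps until |y| > 1 (escaped the saddle)."""
    p = [x, y]
    m = [0.0, 0.0]
    v = [0.0, 0.0]
    for t in range(1, max_steps + 1):
        g = saddle_grad(*p)
        for i in range(2):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Normalizing by sqrt(v_hat) keeps the step near lr even for tiny gradients.
            p[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
        if abs(p[1]) > 1.0:
            return t
    return max_steps


# Start next to the saddle with a tiny nudge (1e-6) in the y direction.
gd_steps = gd_escape_steps(1.0, 1e-6)
adam_steps = adam_escape_steps(1.0, 1e-6)
print("gradient descent:", gd_steps, "steps; Adam:", adam_steps, "steps")
```

With these settings gradient descent multiplies `y` by only 1.02 per step, so it needs several hundred steps to leave the saddle region, while Adam moves `y` by roughly `lr` per step from the very first update.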

The other part of the picture is picking the right minimum, sometimes called minima selection. When a model learns, it is trying to find a low point on the error landscape, like the bottom of a valley, but there can be many valleys of different shapes and depths. Adam reliably finds a good low spot to settle into, even if it is not always the deepest valley, but Adam and SGD do not necessarily settle into the same kind of valley, and some researchers have proposed that the minima SGD tends to select generalize better. Differences in saddle-point escape and minima selection have both come up repeatedly in experiments with neural networks over the years as explanations for the gap in test accuracy.

adam.levine and the Roots of Ancient Stories?

Turning to older writings, the Wisdom of Solomon, an ancient book of wisdom sayings, puts forward a particular outlook on the world, including how life and its beginnings are understood. Texts like this give us a window into how people thought about big questions a very long time ago, and it's fascinating to see those ideas.

People have long wondered where wrongdoing and death came from, especially in the stories of old religious books. The question "what is the origin of sin and death in the Bible?" has been pondered for countless generations. It touches on how people understand their own existence and the challenges they face, which makes it a very old question indeed.

Then there's the question of who the first sinner was. People are still looking at these old stories today to try to answer it. These narratives provide a framework for understanding human actions and their consequences, and they have shaped beliefs over many centuries. The question continues to be discussed and interpreted even now.

In most of the tales told about a figure named Lilith, she stands for chaos, seduction, and opposition to what is considered holy. She is typically shown as a powerful, sometimes frightening presence. In her many forms, Lilith has held a strong grip on people's imaginations and has left a lasting impression on humankind through her stories.

Was it adam.levine's Rib - A Biblical Inquiry?

The story of Adam and Eve says that the first man, Adam, was made from the earth, from dust, and that the first woman, Eve, was made from one of Adam's ribs. This is a well-known part of many traditions and sets the scene for how humanity began. It is a central narrative that has shaped many beliefs about where we come from.

But a question pops up: was it really his rib? This question makes us look more carefully at the details of these old stories and read the text with fresh eyes, asking what it means exactly and what it might imply. It suggests that even very old and respected writings leave room for different interpretations.

Whether seen as a frightening spirit or as Adam's first partner, Lilith is a powerful presence. Her story is one of transformation, and she is a figure who commands respect, or perhaps a bit of fear. She has been interpreted in many ways across different traditions, always carrying a certain weight and influence.

According to the book of Genesis, the Creator made the first woman from one of Adam's ribs. This is the common reading of that ancient text, and this simple statement has had a huge impact on how people have understood beginnings and relationships for a very long time.

However, Ziony Zevit, a scholar of ancient religious texts, has a different idea. He argues that the Hebrew word usually translated as "rib" may refer to a different bone, which shows that even long-held beliefs can be reexamined by those who study the texts closely. His perspective offers a new angle on a very old and familiar narrative and has prompted people to think about it a little differently.

What's the difference between BP and adam.levine's methods?

People who start studying deep learning often ask about the backpropagation (BP) algorithm. BP has a central place in how we understand basic neural networks: it applies the chain rule to compute how much each weight contributed to the error, that is, the gradient of the loss with respect to every parameter.

Given its foundational role, you might expect BP to be mentioned everywhere, yet descriptions of modern deep learning models rarely say they were "trained with BP"; instead they name optimizers such as SGD, Adam, or RMSprop. This is less of a contrast than it seems, because BP and Adam answer different questions. BP computes the gradients, and an optimizer like Adam decides how to use those gradients to update the weights. In practice, nearly every deep learning model is trained with both: backpropagation, usually handled automatically by the framework, feeds gradients to whichever optimizer was chosen.
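The division of labor between BP and the optimizer can be made concrete with a tiny model. This sketch assumes a hypothetical one-input network, y_hat = w2 · tanh(w1 · x), trained on a single example with squared error. `forward_backward` plays the role of backpropagation (it only computes gradients), and the illustrative `Adam` class only turns gradients into updates. Real frameworks compute the backward pass automatically, so this is an explanatory sketch rather than how any particular library is implemented.

```python
import math


def forward_backward(w1, w2, x, y):
    """Backpropagation for y_hat = w2 * tanh(w1 * x) with squared error.

    Returns the loss and its gradients with respect to [w1, w2].
    """
    # Forward pass: keep intermediate values for the backward pass.
    h = math.tanh(w1 * x)
    y_hat = w2 * h
    loss = (y_hat - y) ** 2
    # Backward pass: apply the chain rule from the loss back to each weight.
    d_yhat = 2 * (y_hat - y)
    d_w2 = d_yhat * h
    d_h = d_yhat * w2
    d_w1 = d_h * (1 - h ** 2) * x  # derivative of tanh(u) is 1 - tanh(u)**2
    return loss, [d_w1, d_w2]


class Adam:
    """The optimizer: turns gradients (from any source) into weight updates."""

    def __init__(self, n, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n
        self.v = [0.0] * n
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


params = [0.3, 0.2]
x, y = 1.0, 0.5
initial_loss, _ = forward_backward(*params, x, y)
opt = Adam(n=2)
for _ in range(500):
    loss, grads = forward_backward(*params, x, y)  # BP computes the gradients
    params = opt.step(params, grads)               # Adam decides how to use them
final_loss, _ = forward_backward(*params, x, y)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Swapping `Adam` for a plain SGD step would leave `forward_backward` untouched, which is exactly why papers name the optimizer and take backpropagation for granted.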

adam.levine's Influence on L2 Regularization?

Building on Adam, there is an improved version called AdamW. It takes the original method, which already worked well, and fixes one of its weaknesses, making it more effective in practice.

To understand the improvement, it helps to look first at how Adam improved on SGD and then at the specific problem AdamW addresses: Adam made L2 regularization less effective. L2 regularization adds a penalty proportional to the squared size of the weights, which discourages the model from fitting the training data too closely, so that it generalizes instead of memorizing. In Adam, the gradient of that penalty is divided by the same adaptive scaling factor as the rest of the gradient, so the effective amount of regularization varies from weight to weight and is weaker for weights that have seen large gradients. AdamW fixes this weakness by decoupling weight decay from the gradient-based update: it shrinks the weights directly, separately from Adam's adaptive step.
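The difference between the two approaches shows up in a single update. This sketch assumes scalar weights and hypothetical helper names (`adam_l2_step`, `adamw_step`, `_adam_update`, `new_state`). It applies decoupled decay as `w - lr * weight_decay * w`, the same form PyTorch's `torch.optim.AdamW` uses, and omits the extra learning-rate schedule multiplier that the original AdamW paper allows.

```python
import math


def _adam_update(w, g, state, lr, beta1, beta2, eps):
    """One plain Adam step on a scalar weight w with gradient g."""
    state["t"] += 1
    t = state["t"]
    state["m"] = beta1 * state["m"] + (1 - beta1) * g
    state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
    m_hat = state["m"] / (1 - beta1 ** t)
    v_hat = state["v"] / (1 - beta2 ** t)
    # The adaptive denominator rescales everything inside g, including any L2 term.
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)


def adam_l2_step(w, loss_grad, state, lr=0.1, weight_decay=0.01,
                 beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam with classic L2 regularization: the penalty's gradient joins the loss gradient."""
    g = loss_grad + weight_decay * w  # gradient of loss + (weight_decay / 2) * w**2
    return _adam_update(w, g, state, lr, beta1, beta2, eps)


def adamw_step(w, loss_grad, state, lr=0.1, weight_decay=0.01,
               beta1=0.9, beta2=0.999, eps=1e-8):
    """Decoupled weight decay: shrink w directly, outside the adaptive step."""
    w = w - lr * weight_decay * w
    return _adam_update(w, loss_grad, state, lr, beta1, beta2, eps)


def new_state():
    return {"m": 0.0, "v": 0.0, "t": 0}


# One step on a weight of 1.0 whose loss gradient is zero,
# so only the regularization acts on it.
for wd in (0.01, 0.0001):
    w_l2 = adam_l2_step(1.0, 0.0, new_state(), weight_decay=wd)
    w_decoupled = adamw_step(1.0, 0.0, new_state(), weight_decay=wd)
    print(f"weight_decay={wd}: Adam+L2 -> {w_l2:.5f}, AdamW-style -> {w_decoupled:.5f}")
```

In the Adam+L2 version the penalty gradient is normalized away, so the weight moves by roughly `lr` whether `weight_decay` is 0.01 or 0.0001. The decoupled version shrinks it by `lr * weight_decay * w`, so the regularization strength you set is the one you get.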

- How Did Bernie Mac Die

- Rose Mcgowan

- Missing College Student Punta Cana

- Benson Boone Girlfriend

- Castle Series Cast

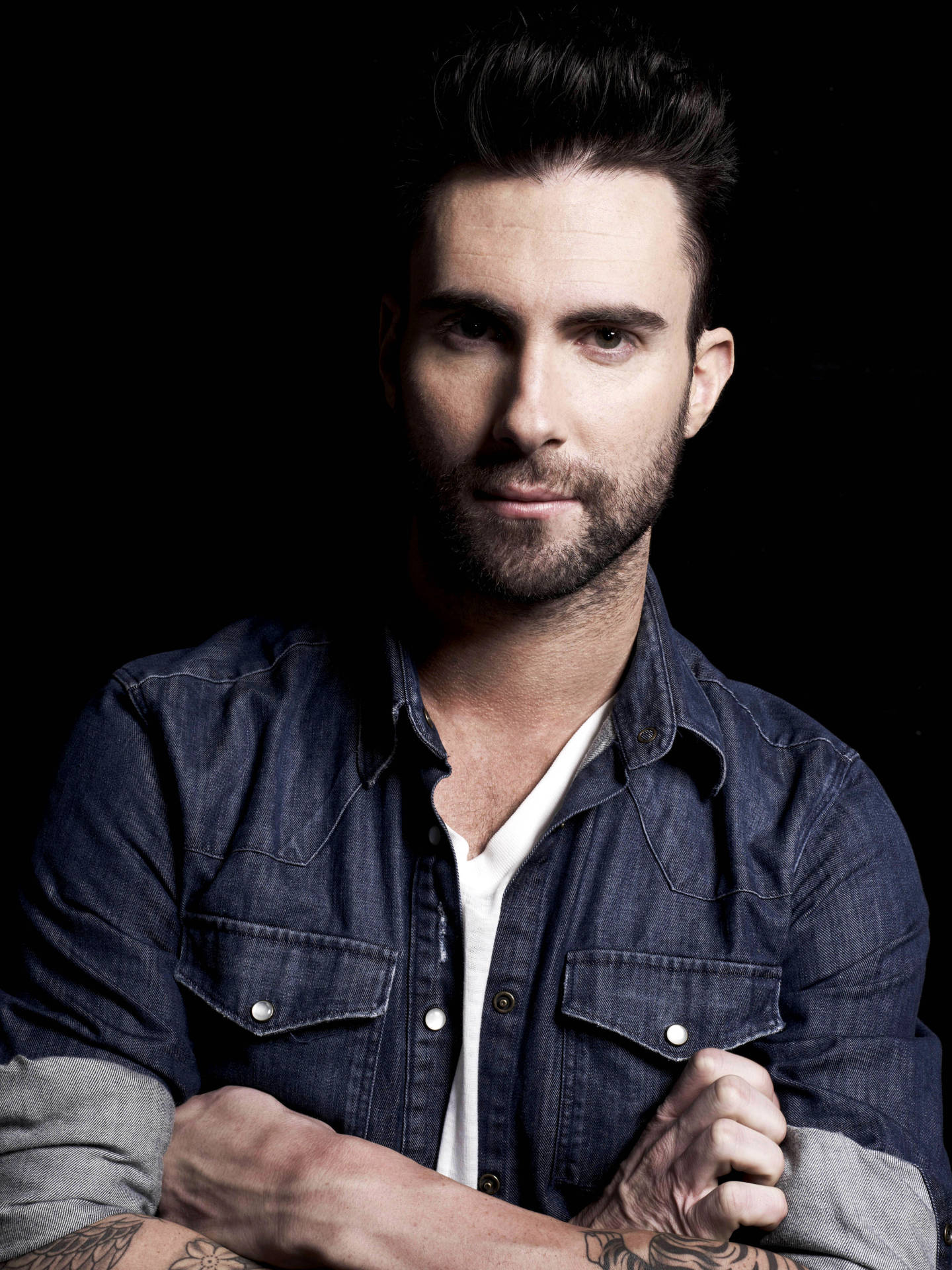

Adam Levine

Top 999+ Adam Levine Wallpapers Full HD, 4K Free to Use

adam levine - Adam Levine Photo (1605643) - Fanpop